MOODetector

A System for Mood-based Classification and Retrieval of Audio Music | May 2010 - November 2013 (42 months) | Financed by the FCT: 77 304 €

Project website - FCT PTDC/EIA-EIA/102185/2008

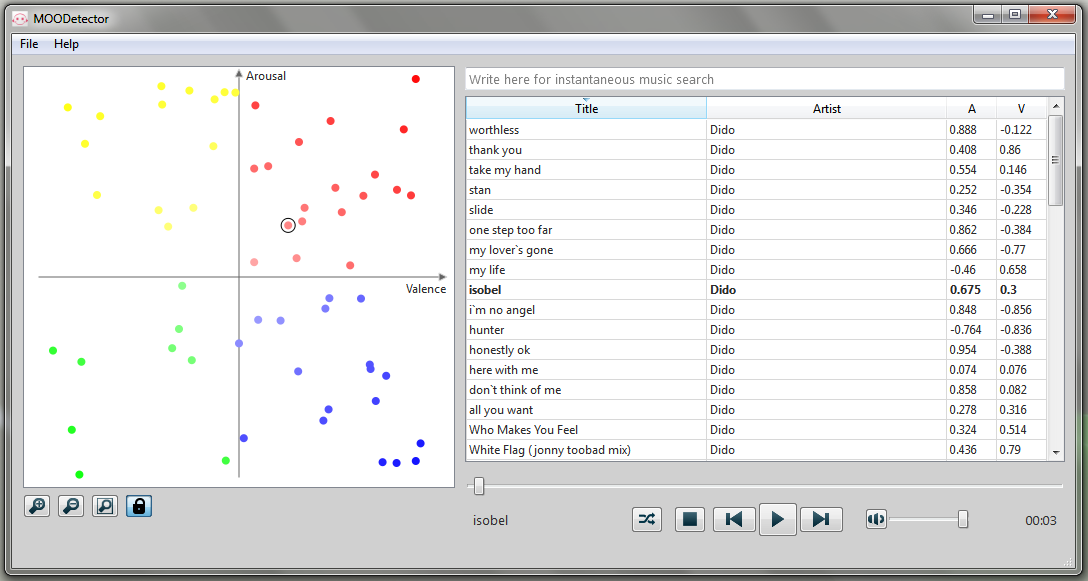

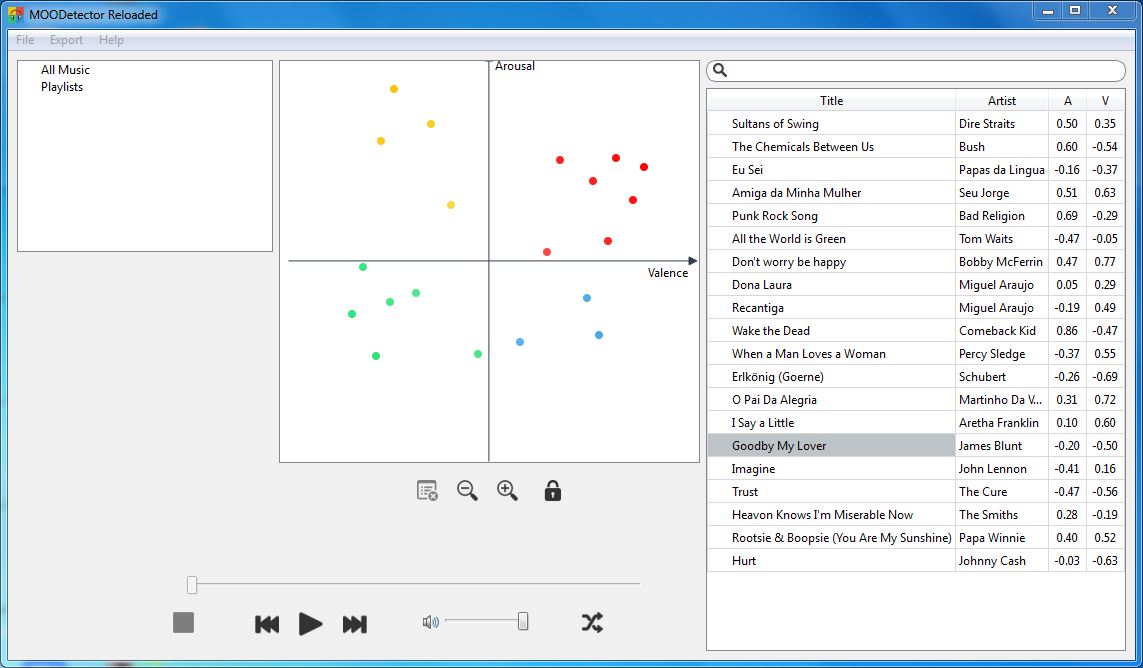

The main goal of this project was to develop music emotion recognition solutions based on audio signals and song lyrics. It also produced a computational prototype for querying songs and generating playlists based on their emotional content.

I was a research fellow on the project after my master’s thesis, with the main goal of researching methodologies for the analysis and classification of emotion based on audio signals. The main contributions of the project were novel emotionally relevant audio features (my Ph.D. work) and lyrical features, as well as new public datasets for researchers in the field. In addition to the main prototype, I also collaborated on the development of EWA (Emotion Web Annotator), used to gather annotations for datasets, and MOODoke, which uses karaoke videos from YouTube to analyse the emotional content of lyrics.