Exploring emotional information in music

Challenges of Machine Learning approaches

Renato Panda

September 8, 2021

About Me

Renato Panda, PhD

I. Researcher @ Ci2.IPT

C. Researcher @ CISUC (MIRlab)

- Emotion Recognition

- Information Retrieval

- Applied Machine Learning & Software Engineering

What is Music Information Retrieval?

The interdisciplinary science of retrieving information from music. Small but growing field of research with many real-world applications.

Music Classification

Categorizing music items by genre, emotion, artist, and so on.

Music Recommendation (Spotify)

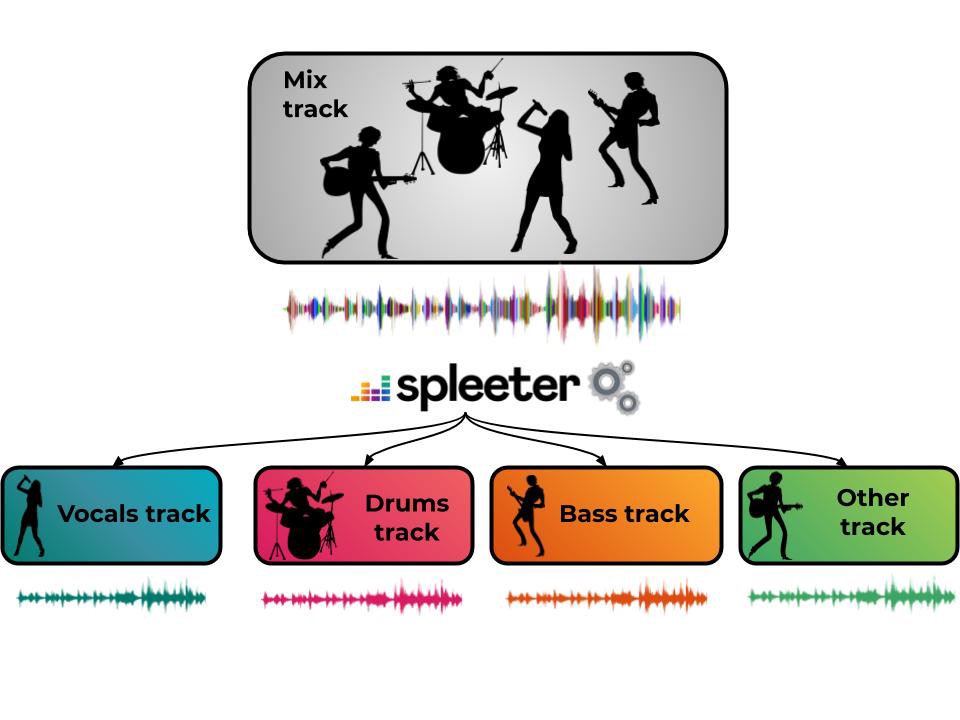

Music Source Separation and Recognition

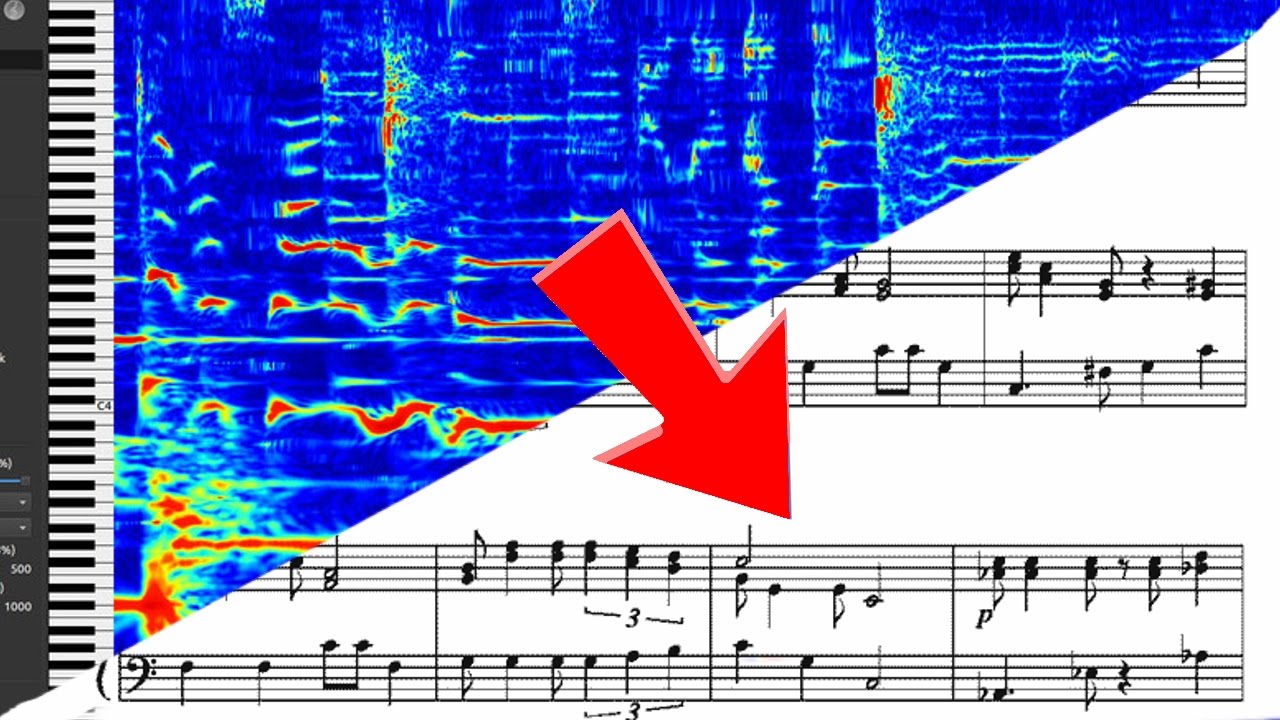

Automatic Music Transcription

Audio Fingerprinting

Music Search and Discover

What is Music Emotion Recognition?

Subfield of MIR that deals with emotion.

Why MER?

The Information Age

Music distribution methods changed drastically in the last decades.

Streaming services provide millions of songs.

Information Overload

How do we browse such collections effectively?

What do we know about music?

Music has been with us since prehistoric times, serving as a kind of “language of emotion”.

Conveying emotion is regarded as music’s primary purpose (Cooke, 1959), the “ultimate reason why humans engage with it” (Pannese et al., 2016).

How Does MER Work?

Bridges several different fields such as music theory, psychology and computer science (DSP and ML).

Music as in Audio? Lyrics? Scores?

What is Sound and Music?

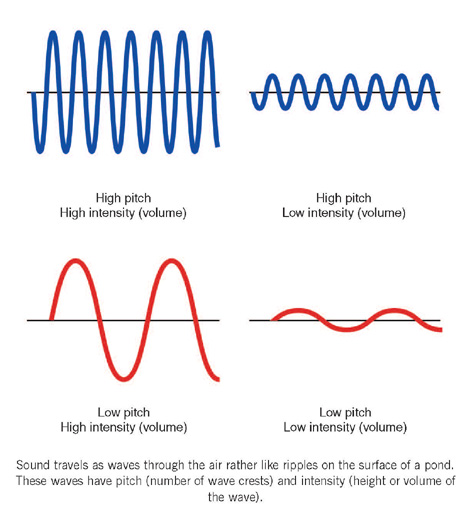

Musical Notes & Wave Properties

Emotion and Emotion Taxonomies

What is Emotion?

A short experience in response to a [musical] stimulus.

Context and Subjectivity

Basic emotions are universal (Ekman, 1987)

… but also subjective and context-dependent.

We focus on perceived emotion (!= felt).

How do we classify emotions?

- Basic emotions (Ekman, 1992)

- Hevner’s adjective circle (Hevner, 1936)

- MIREX AMC Task (Hu et al., 2007)

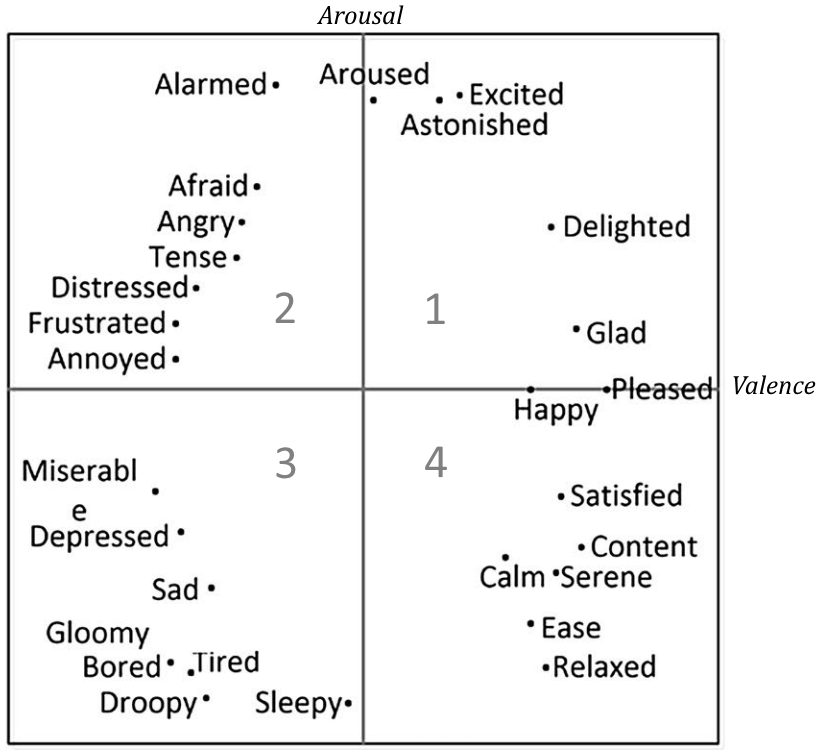

How do we classify emotions?

- Russell’s model (Russell, 1980)

  - Valence (pleasure-displeasure) and arousal

- Thayer’s model (Thayer, 1989)

  - Energetic arousal vs. tense arousal
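
As a rough illustration of how a dimensional model can be turned into discrete classes, the sketch below maps a valence/arousal pair to one of the four quadrants of Russell's plane. The function name, the assumption that both values are centered at 0 (e.g., in [-1, 1]), and the handling of points on the axes are illustrative choices, not something taken from the slides.

```python
def russell_quadrant(valence: float, arousal: float) -> str:
    """Map a (valence, arousal) pair to a quadrant of Russell's plane.

    Assumes both dimensions are centered at 0 (hypothetical convention).
    Q1: positive valence, high arousal   Q2: negative valence, high arousal
    Q3: negative valence, low arousal    Q4: positive valence, low arousal
    Points lying exactly on an axis are assigned to Q1/Q4 (valence) or
    Q1/Q2 (arousal) by convention of this sketch.
    """
    if arousal >= 0:
        return "Q1" if valence >= 0 else "Q2"
    return "Q4" if valence >= 0 else "Q3"


if __name__ == "__main__":
    for v, a in [(0.7, 0.6), (-0.5, 0.8), (-0.6, -0.4), (0.4, -0.7)]:
        print(f"valence={v:+.1f}, arousal={a:+.1f} -> {russell_quadrant(v, a)}")
```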

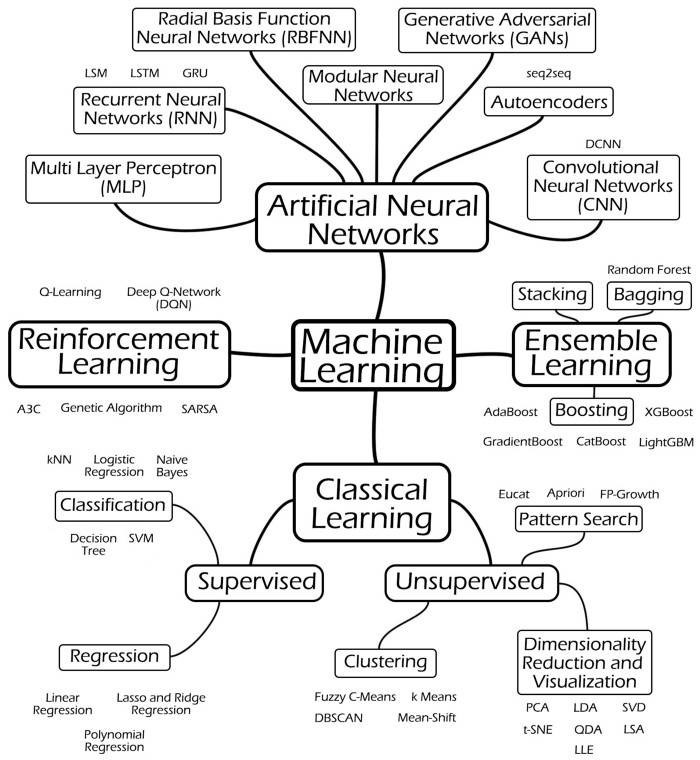

MER and Machine Learning

Machine Learning? Deep Learning?

Dataset Collection

MER Datasets

Most are either very small (<1000 songs) or large (1M songs) but with low-quality, uncontrolled annotations.

- 2018 - 4QAED, 900 clips, 4 classes (validated)

- 2016 - DEAM, 1802 songs, AV using MTurk (low quality)

- 2013 - MIREX-like, 903 songs (30-sec clips), 764 lyrics and 193 MIDI files, 5 classes (non-validated)

- 2012 - DEAP120, 120 song/videos + EEGs + biosignals + questionnaires, AV

- 2011 - MSD, nearly 1 million song entries and features, Last.FM uncontrolled tags

Building a MER Dataset

Building a MER Dataset - Human Validation

Feature Extraction

Zero Crossing Rate

A simple indicator of noisiness: count the number of times the signal crosses the X-axis (in other words, changes sign).
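
A minimal sketch of this idea, assuming the signal is a one-dimensional NumPy array of samples. The function name and the normalization by the number of adjacent sample pairs are illustrative choices; audio toolkits typically compute the rate per frame rather than over the whole signal.

```python
import numpy as np


def zero_crossing_rate(signal: np.ndarray) -> float:
    """Fraction of adjacent sample pairs whose signs differ."""
    negative = np.signbit(signal)
    crossings = np.count_nonzero(negative[1:] != negative[:-1])
    return crossings / (len(signal) - 1)


# Synthetic comparison: a pure tone versus white noise (1 second at 8 kHz).
sr = 8000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 100 * t + 0.1)  # small phase offset avoids exact zeros
noise = np.random.default_rng(0).standard_normal(sr)

if __name__ == "__main__":
    print(f"tone:  {zero_crossing_rate(tone):.3f}")
    print(f"noise: {zero_crossing_rate(noise):.3f}")
```

The noisy signal changes sign far more often than the tone, which is why the rate works as a crude noisiness indicator.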

Tempo and Tempogram

Machine Learning

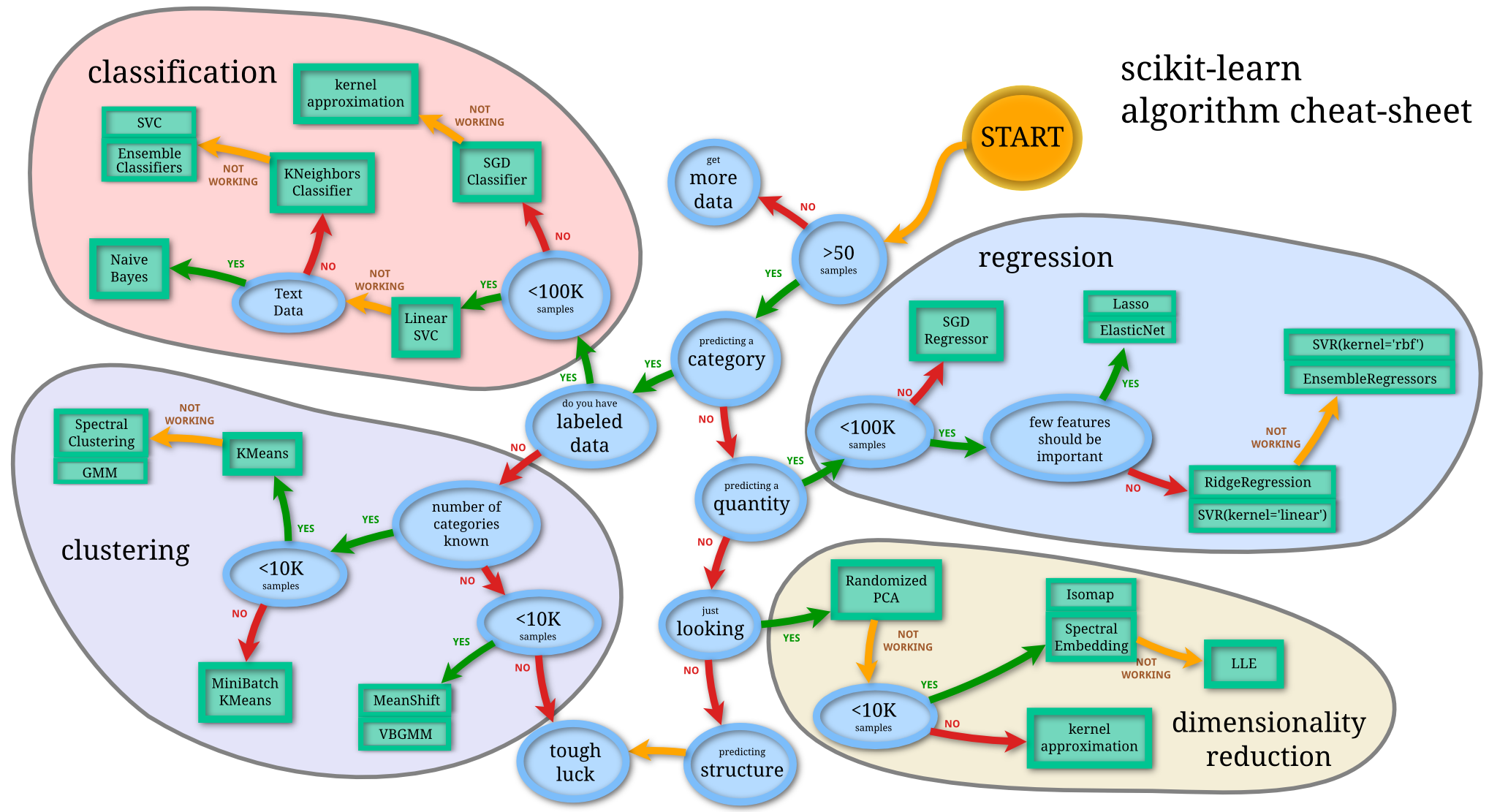

ML Algorithms

A: Hints & Experimentation

Novel Contributions

Novel Emotionally-relevant Audio Features

From Audio Signal to Notes

- A sequence of f0s and saliences

- Overall note (e.g., A4)

- Note duration (sec)

- Starting and ending time
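
To make the note representation concrete, the sketch below summarizes a sequence of f0 estimates into an overall note name, duration, and start/end times. It uses the standard equal-temperament conversion (A4 = 440 Hz = MIDI 69). Taking the median pitch as the overall note and using a fixed hop between f0 frames are assumptions of this example, not the method described in the slides, and saliences are ignored here.

```python
import math
import statistics

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def hz_to_midi(f0_hz: float) -> float:
    """Convert a frequency in Hz to a (fractional) MIDI note number."""
    return 69 + 12 * math.log2(f0_hz / 440.0)


def midi_to_name(midi: int) -> str:
    """Convert an integer MIDI note number to a name such as 'A4'."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


def summarize_note(f0s: list[float], start: float, hop: float) -> dict:
    """Summarize one note from its f0 frames.

    `start` is the onset time in seconds and `hop` the time between
    consecutive f0 frames (both hypothetical parameters of this sketch).
    """
    midi_values = [hz_to_midi(f) for f in f0s]
    overall = round(statistics.median(midi_values))  # assumed aggregation rule
    duration = len(f0s) * hop
    return {
        "note": midi_to_name(overall),
        "start": start,
        "end": start + duration,
        "duration": duration,
    }


if __name__ == "__main__":
    print(summarize_note([438.0, 440.0, 442.0, 441.0], start=1.0, hop=0.01))
```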

Novel Emotionally-Relevant Audio Features

- The note data is exploited to model higher-level concepts, e.g.:

  - Musical Texture

    - Number of lines (thickness), transitions

  - Expressive techniques

    - Articulation

    - Glissando

    - Vibrato and Tremolo

  - Melody, dynamics and rhythm

    - Related to note pitch, intensity and duration statistics

- Also explored the voice-only signal in sad/happy songs

Several Interesting Results

- Novel features significantly improve results (8.6%)

- High arousal songs are easier to classify

- Low arousal songs (calm happy vs. sad) are difficult to tell apart

- Voice-only signal seems relevant for these (sad vs. calm)

Ongoing Work

- Audio/lyrics and Deep Learning

  - Emotion Classification

  - Transfer Learning

  - Data Augmentation

- Two FCT projects approved (2021)

  - Music Emotion Recognition - Next Generation (PTDC/CCI-COM/3171/2021)

  - Playback the music of the brain - decoding emotions elicited by musical sounds in the human brain (EXPL/PSI-GER/0948/2021)

- Development of Software Prototypes

Bridging Fundamental and Applied Research

MER Web Concept

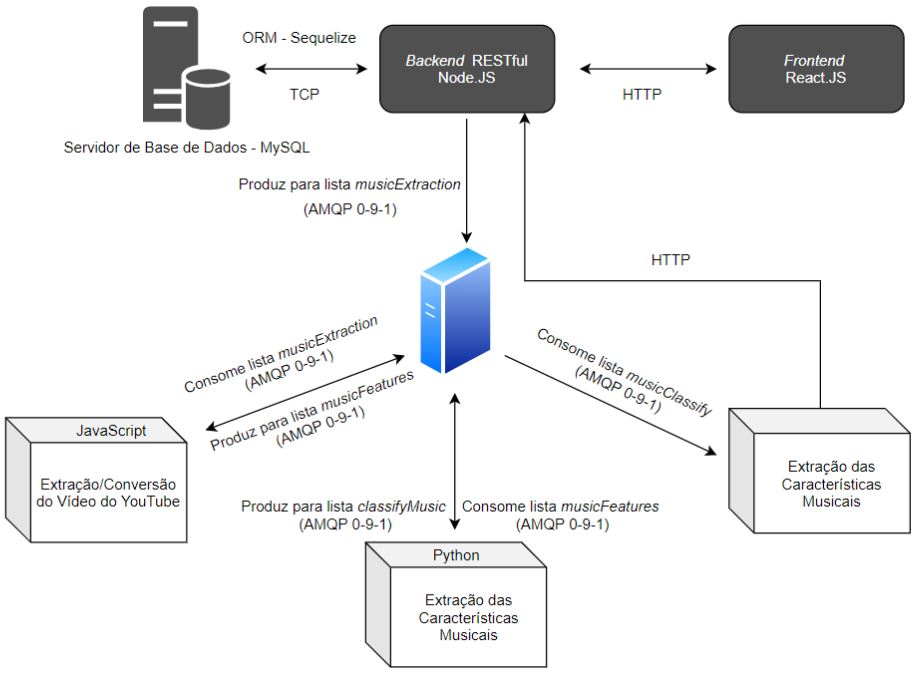

A distributed MER system proof-of-concept using microservices

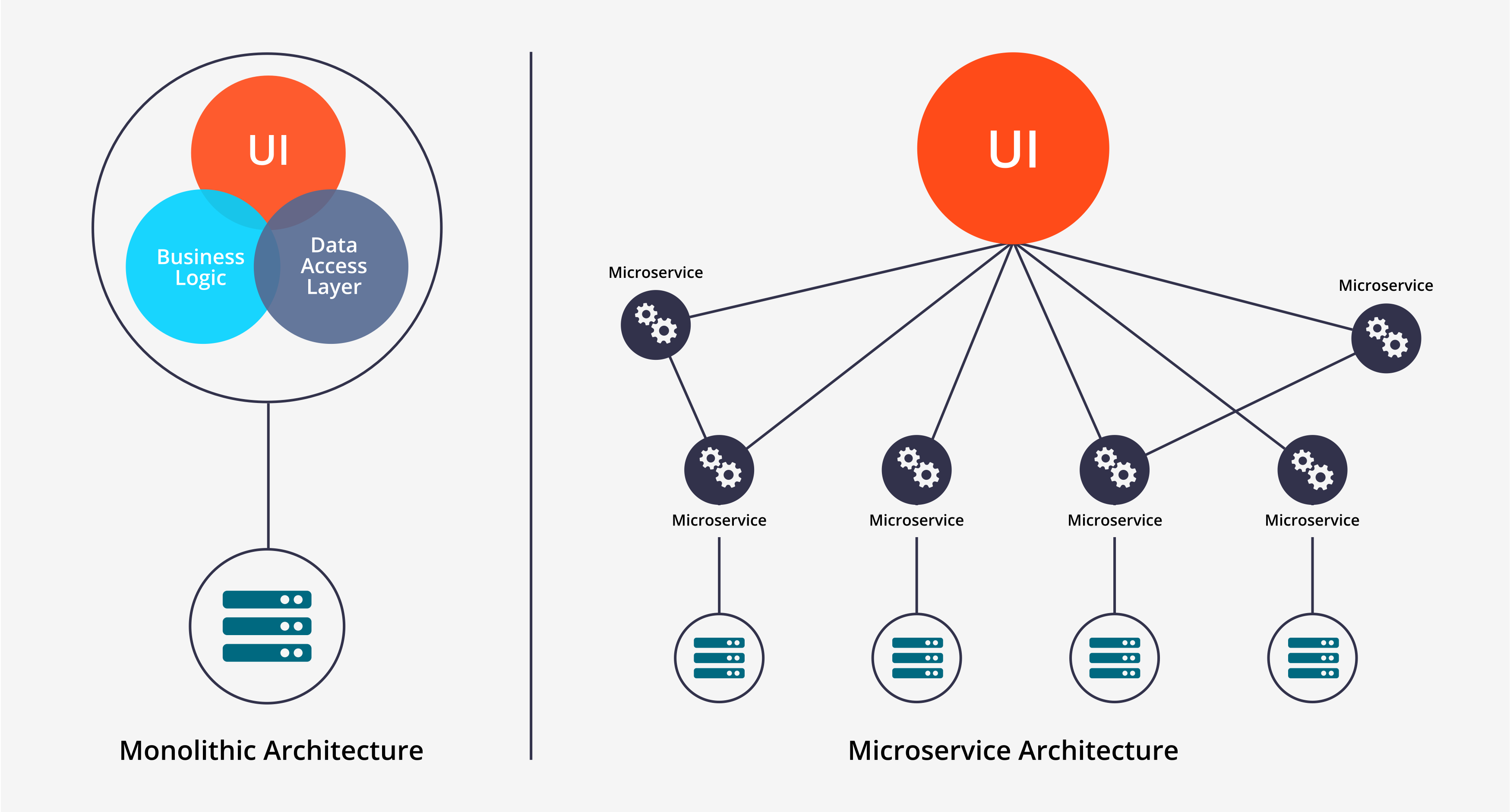

Monolith vs. Microservices

MER Web Concept (Architecture)

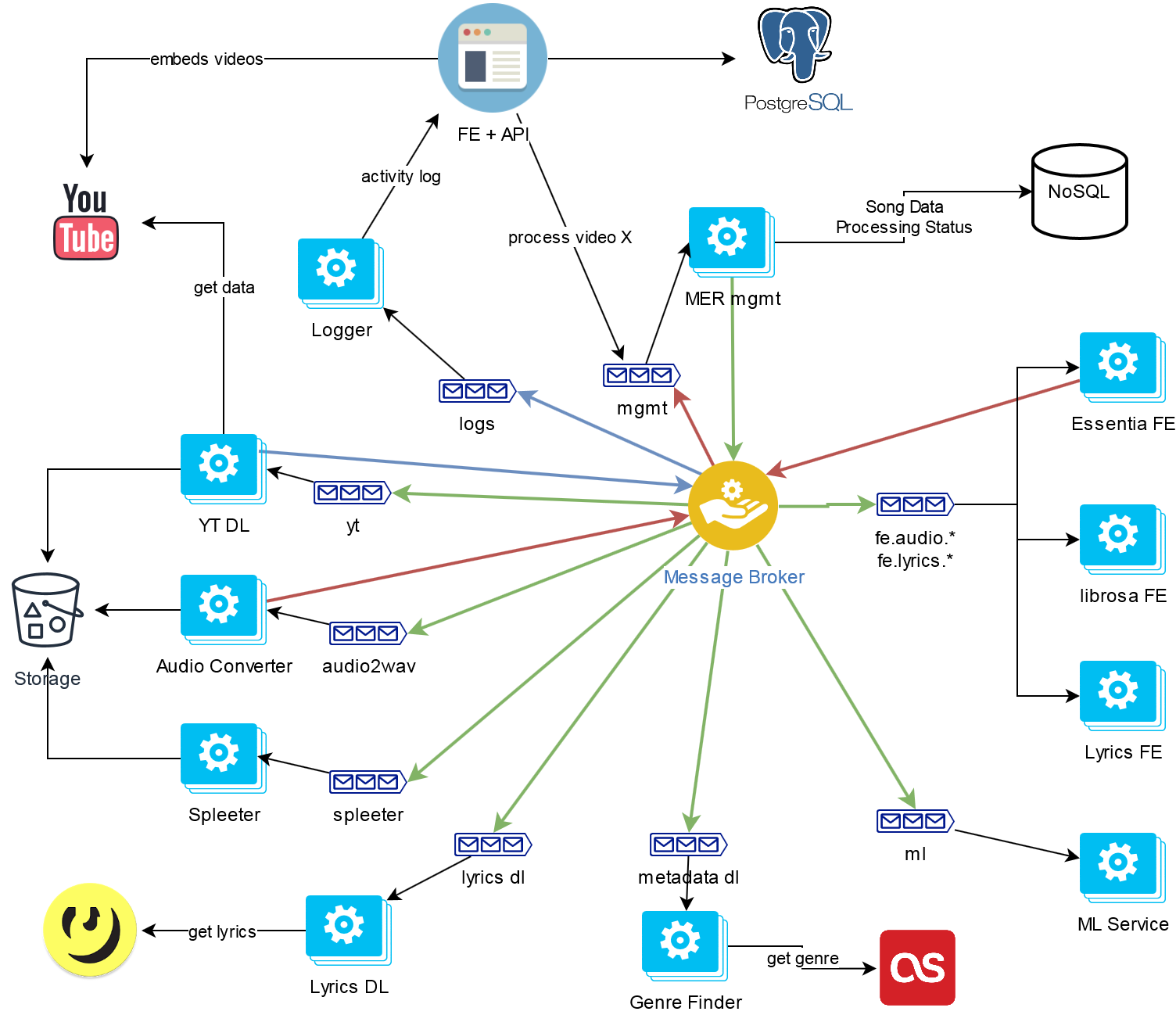

EmoTube (WIP)

Complex MER platform fusing audio (raw signals), lyrics (text), source separation, signal segmentation and more. Built as containerized microservices (Docker), with automated tests and builds, parallelization via brokers, orchestrated with Kubernetes, and deployed on a private cloud.
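
The broker-based parallelization can be pictured with a small single-process sketch: tasks are published to a queue and several workers consume them independently. Here Python's in-memory `queue.Queue` and threads stand in for a real message broker and separate containers. The slides do not name the broker or the service interfaces, so `extract_features`, `run_pipeline`, and the clip identifiers are hypothetical placeholders.

```python
import queue
import threading


def extract_features(clip_id: str) -> dict:
    """Placeholder for a feature-extraction service (hypothetical)."""
    return {"clip": clip_id, "id_length": len(clip_id)}


def worker(tasks: queue.Queue, results: queue.Queue) -> None:
    """Consume clip ids until a None sentinel arrives."""
    while True:
        clip_id = tasks.get()
        if clip_id is None:
            break
        results.put(extract_features(clip_id))


def run_pipeline(clip_ids: list[str], n_workers: int = 3) -> list[dict]:
    """Fan tasks out to workers through a shared queue and collect results."""
    tasks: queue.Queue = queue.Queue()
    results: queue.Queue = queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(tasks, results))
        for _ in range(n_workers)
    ]
    for thread in threads:
        thread.start()
    for clip_id in clip_ids:
        tasks.put(clip_id)
    for _ in threads:
        tasks.put(None)  # one stop sentinel per worker
    for thread in threads:
        thread.join()
    collected = []
    while not results.empty():
        collected.append(results.get())
    # Workers finish in arbitrary order, so sort for a stable result.
    return sorted(collected, key=lambda item: item["clip"])


if __name__ == "__main__":
    print(run_pipeline(["clip-001", "clip-002", "clip-003", "clip-004"]))
```

In a deployed system the queue would live in a separate broker process, which lets the number of workers scale independently of the services that publish tasks.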

João Canoso (MSc)

Cloud Architect / DevOps Engineer

Full-Stack Developer

Tiago António (MSc)

Machine Learning Engineer

Full-Stack Developer

EmoTube (WIP) Architecture

Expenses-OCR

Mobile App using OCR to process invoices

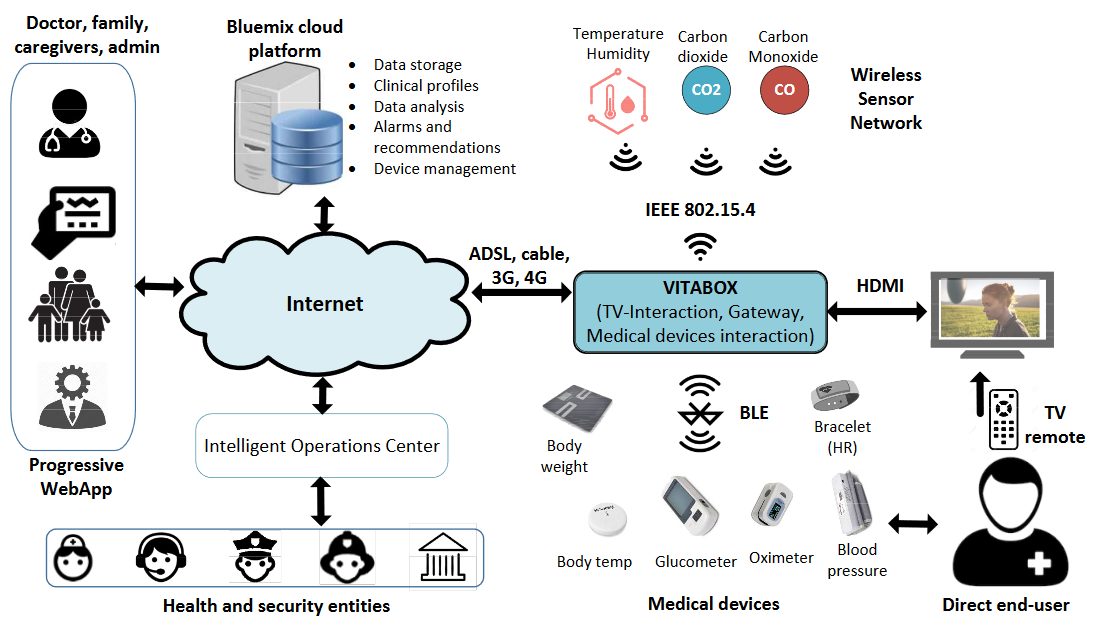

VITASENIOR

Telehealth solution to monitor and improve health care for the elderly living in isolated areas

Others

MOVIDA, Strate App, MovTour